Now that my research project ‘Digital technologies and public procurement. Gatekeeping and experimentation in digital public governance’ nears its end, some outputs start to emerge. In this post, I would like to highlight two policy briefings summarising some of my top-level policy recommendations, and providing links to more detailed analysis. All materials are available in the ‘Digital Procurement Governance’ tab.

Digital procurement, PPDS and multi-speed datafication -- some thoughts on the March 2023 PPDS Communication

The 2020 European strategy for data earmarked public procurement as a high-priority area for the development of common European data spaces for public administrations. The 2020 data strategy stressed that:

Public procurement data are essential to improve transparency and accountability of public spending, fighting corruption and improving spending quality. Public procurement data is spread over several systems in the Member States, made available in different formats and is not easily possible to use for policy purposes in real-time. In many cases, the data quality needs to be improved.

To address those issues, the European Commission was planning to ‘Elaborate a data initiative for public procurement data covering both the EU dimension (EU datasets, such as TED) and the national ones’ by the end of 2020, which would be ‘complemented by a procurement data governance framework’ by mid 2021.

With a 2+ year delay, details for the creation of the public procurement data space (PPDS) were disclosed by the European Commission on 16 March 2023 in the PPDS Communication. The procurement data governance framework is now planned to be developed in the second half of 2023.

In this blog post, I offer some thoughts on the PPDS, its functional goals, likely effects, and the quickly closing window of opportunity for Member States to support its feasibility through an ambitious implementation of the new procurement eForms at domestic level (on which see earlier thoughts here).

1. The PPDS Communication and its goals

The PPDS Communication sets some lofty ambitions aligned with those of the closely-related process of procurement digitalisation, which the European Commission in its 2017 Making Procurement Work In and For Europe Communication already saw as not only an opportunity ‘to streamline and simplify the procurement process’, but also ‘to rethink fundamentally the way public procurement, and relevant parts of public administrations, are organised … [to seize] a unique chance to reshape the relevant systems and achieve a digital transformation’ (at 11-12).

Following the same rhetoric of transformation, the PPDS Communication now stresses that ‘Integrated data combined with the use of state-of-the-art and emerging analytics technologies will not only transform public procurement, but also give new and valuable insights to public buyers, policy-makers, businesses and interested citizens alike’ (at 2). It goes further to suggest that ‘given the high number of ecosystems concerned by public procurement and the amount of data to be analysed, the impact of AI in this field has a potential that we can only see a glimpse of so far’ (at 2).

The PPDS Communication claims that this data space ‘will revolutionise the access to and use of public procurement data:

It will create a platform at EU level to access for the first time public procurement data scattered so far at EU, national and regional level.

It will considerably improve data quality, availability and completeness, through close cooperation between the Commission and Member States and the introduction of the new eForms, which will allow public buyers to provide information in a more structured way.

This wealth of data will be combined with an analytics toolset including advanced technologies such as Artificial Intelligence (AI), for example in the form of Machine Learning (ML) and Natural Language Processing (NLP).’

A first comment or observation is that this rhetoric of transformation and revolution not only tends to create excessive expectations on what can realistically be delivered by the PPDS, but can also further fuel the ‘policy irresistibility’ of procurement digitalisation and thus eg generate excessive experimentation or investment into the deployment of digital technologies on the basis of such expectations around data access through PPDS (for discussion, see here). Policy-makers would do well to hold off on any investments and pilot projects seeking to exploit the data presumptively pooled in the PPDS until after its implementation. A closer look at the PPDS and the significant roadblocks towards its full implementation will shed further light on this issue.

2. What is the PPDS?

Put simply, the PPDS is a project to create a single data platform to bring into one place ‘all procurement data’ from across the EU, ie both data on above threshold contracts subjected to mandatory EU-wide publication through TED (via eForms from October 2023), and data on below threshold contracts, whose publication may be required by the domestic laws of the Member States or be entirely voluntary for contracting authorities.

Given that above threshold procurement data is already (in the process of being) captured at EU level, the PPDS is very much about data on procurement not covered by the EU rules—which represents 80% of all public procurement contracts. As the PPDS Communication stresses

To unlock the full potential of public procurement, access to data and the ability to analyse it are essential. However, data from only 20% of all call for tenders as submitted by public buyers is available and searchable for analysis in one place [ie TED]. The remaining 80% are spread, in different formats, at national or regional level and difficult or impossible to re-use for policy, transparency and better spending purposes. In order (sic) words, public procurement is rich in data, but poor in making it work for taxpayers, policy makers and public buyers.

The PPDS thus intends to develop a ‘technical fix’ to gain a view on the below-threshold reality of procurement across the EU, by ‘pulling and pooling’ data from existing (and to be developed) domestic public contract registers and transparency portals. The PPDS is thus a mechanism for the aggregation of procurement data currently not available in (harmonised) machine-readable and structured formats (or at all).

As the PPDS Communication makes clear, it consists of four layers:

(1) a user interface layer (ie a website and/or app), underpinned by

(2) an analytics layer, which in turn is underpinned by

(3) an integration layer that brings together and minimally quality-assures

(4) the data layer, sourced from TED, Member State public contract registers (including those at sub-national level), and data from other sources (eg data on beneficial ownership).

The two top layers condense all potential advantages of the PPDS, with the analytics layer seeking to develop a ‘toolset including emerging technologies (AI, ML and NLP)’ to extract data insights for a multiplicity of purposes (see below 3), and the top user interface seeking to facilitate differential data access for different types of users and stakeholders (see below 4). The two bottom layers, and in particular the data layer, are the ones doing all the heavy lifting. Unavoidably, without data, the PPDS risks being little more than an empty shell. As always, ‘no data, no fun’ (see below 5).

Importantly, the top three layers are centralised and the European Commission has responsibility (and funding) for developing them, while the bottom data layer is decentralised, with each Member State retaining responsibility for digitalising its public procurement systems and connecting its data sources to the PPDS. Member States are also expected to bear their own costs, although there is EU funding available through different mechanisms. This allocation of responsibilities follows the limited competence of the EU in this area of inter-administrative cooperation, which unfortunately heightens the risks of the PPDS becoming little more than an empty shell, unless Member States really take the implementation of eForms and the collaborative approach to the construction of the PPDS seriously (see below 6).

The PPDS Communication foresees a progressive implementation of the PPDS, with the goal of having ‘the basic architecture and analytics toolkit in place and procurement data published at EU level available in the system by mid-2023. By the end of 2024, all participating national publication portals would be connected, historic data published at EU level integrated and the analytics toolkit expanded. As of 2025, the system could establish links with additional external data sources’ (at 2). It will most likely be delayed, but that is not very important in the long run—especially as the already accrued delays are the ones that pose a significant limitation on the adequate rollout of the PPDS (see below 6).

3. PPDS’ expected functionality

The PPDS Communication sets expectations around the functionality that could be extracted from the PPDS by different agents and stakeholders.

For public buyers, in addition to reducing the burden of complying with different types of (EU-mandated) reporting, the PPDS Communication expects that ‘insights gained from the PPDS will make it much easier for public buyers to

team up and buy in bulk to obtain better prices and higher quality;

generate more bids per call for tenders by making calls more attractive for bidders, especially for SMEs and start-ups;

fight collusion and corruption, as well as other criminal acts, by detecting suspicious patterns;

benchmark themselves more accurately against their peers and exchange knowledge, for instance with the aim of procuring more green, social and innovative products and services;

through the further digitalisation and emerging technologies that it brings about, automate tasks, bringing about considerable operational savings’ (at 2).

This largely maps onto my analysis of likely applications of digital technologies for procurement management, assuming the data is there (see here).

The PPDS Communication also expects that policy-makers will ‘gain a wealth of insights that will enable them to predict future trends’; that economic operators, and SMEs in particular, ‘will have an easy-to-use portal that gives them access to a much greater number of open call for tenders with better data quality’, and that ‘Citizens, civil society, taxpayers and other interested stakeholders will have access to much more public procurement data than before, thereby improving transparency and accountability of public spending’ (at 2).

Of all the expected benefits or functionalities, the most important ones are those attributed to public buyers and, in particular, the possibility of developing ‘category management’ insights (eg potential savings or benchmarking), systems of red flags in relation to corruption and collusion risks, and the automation of some tasks. However, unlocking most of these functionalities is not dependent on the PPDS, but rather on the existence of procurement data at the ‘right’ level.

For example, category management or benchmarking may be more relevant or adequate (as well as more feasible) at national than at supra-national level, and the development of systems of red flags can also take place at below-EU level, as can automation. Importantly, the development of such functionalities using pan-EU data, or data concerning more than one Member State, could bias the tools in a way that makes them less suited, or unsuitable, for deployment at national level (eg if the AI is trained on data concerning solely jurisdictions other than the one where it would be deployed).

In that regard, the expected functionalities arising from PPDS require some further thought and it can well be that, depending on implementation (in particular in relation to multi-speed datafication, as below 5), Member States are better off solely using domestic data than that coming from the PPDS. This is to say that PPDS is not a solid reality and that its enabling character will fluctuate with its implementation.

4. Differential procurement data access through PPDS

As mentioned above, the PPDS Communication stresses that ‘Citizens, civil society, taxpayers and other interested stakeholders will have access to much more public procurement data than before, thereby improving transparency and accountability of public spending’ (at 2). However, this does not mean that the PPDS will be (entirely) open data.

The Communication itself makes clear that ‘Different user categories (e.g. Member States, public buyers, businesses, citizens, NGOs, journalists and researchers) will have different access rights, distinguishing between public and non-public data and between participating Member States that share their data with the PPDS (PPDS members, …) and those that need more time to prepare’ (at 8). Relatedly, ‘PPDS members will have access to data which is available within the PPDS. However, even those Member States that are not yet ready to participate in the PPDS stand to benefit from implementing the principles below, due to their value for operational efficiency and preparing for a more evidence-based policy’ (at 9). This raises two issues.

First, and rightly, the Communication makes clear that the PPDS moves away from a model of ‘fully open’ or ‘open by default’ procurement data, and that access to the PPDS will require differential permissioning. This is the correct approach. Regardless of the future procurement data governance framework, it is clear that the emerging thicket of EU data governance rules ‘requires the careful management of a system of multi-tiered access to different types of information at different times, by different stakeholders and under different conditions’ (see here). This will however raise significant issues for the implementation of the PPDS, as it will generate some constraints or disincentives for an ambitious implementation of eForms at national level (see below 6).

Second, and less clearly, the PPDS Communication evidences that not all Member States will automatically have equal access to PPDS data. The design seems to be such that Member States that do not feed data into PPDS will not have access to it. While this could be conceived as an incentive for all Member States to join PPDS, this outcome is by no means guaranteed. As above (3), it is not clear that Member States will be better off—in terms of their ability to extract data insights or to deploy digital technologies—by having access to pan-EU data. The main benefit resulting from pan-EU data only accrues collectively and, primarily, by means of facilitating oversight and enforcement by the European Commission. From that perspective, the incentives for PPDS participation for any given Member State may be quite warped or internally contradictory.

Moreover, given that plugging into PPDS is not cost-free, a Member State that developed a data architecture not immediately compatible with PPDS may well wonder whether it made sense to shoulder the additional costs and risks. From that perspective, it can only be hoped that the existence of EU funding and technical support will be maximised by the European Commission to offload that burden from the (reluctant) Member States. However, even then, full PPDS participation by all Member States will still not dispel the risk of multi-speed datafication.
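To make the idea of differential permissioning discussed in this section more concrete, the following is a minimal sketch in Python. It is purely illustrative: the PPDS Communication does not specify any access rules at this level of detail, so the user categories chosen, the `DataField`, `User` and `can_access` names, and the rule that non-public or embargoed data is visible only to users acting for participating Member States are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum
from typing import Optional


class UserCategory(Enum):
    # Hypothetical subset of the user categories listed in the Communication.
    MEMBER_STATE = "member_state"
    PUBLIC_BUYER = "public_buyer"
    BUSINESS = "business"
    CITIZEN = "citizen"


@dataclass(frozen=True)
class DataField:
    name: str
    public: bool                         # is the field meant to be published at all?
    public_from: Optional[date] = None   # optional embargo date (eg for prices)


@dataclass(frozen=True)
class User:
    category: UserCategory
    is_ppds_member: bool  # does the user's Member State share data with the PPDS?


def can_access(user: User, data_field: DataField, today: date) -> bool:
    """Assumed two-tier rule; not taken from the PPDS Communication."""
    embargo_lapsed = data_field.public_from is None or today >= data_field.public_from
    # Tier 1: public data whose embargo (if any) has lapsed is open to everyone.
    if data_field.public and embargo_lapsed:
        return True
    # Tier 2 (assumption): non-public or embargoed data is limited to
    # Member State users whose Member State participates in the PPDS.
    return user.category is UserCategory.MEMBER_STATE and user.is_ppds_member


# Usage: a price field that only becomes public on 1 January 2024.
price = DataField("award_price", public=True, public_from=date(2024, 1, 1))
citizen = User(UserCategory.CITIZEN, is_ppds_member=True)
ministry = User(UserCategory.MEMBER_STATE, is_ppds_member=True)
outsider = User(UserCategory.MEMBER_STATE, is_ppds_member=False)

print(can_access(citizen, price, date(2023, 6, 1)))   # False: still embargoed
print(can_access(ministry, price, date(2023, 6, 1)))  # True: participating Member State
print(can_access(outsider, price, date(2023, 6, 1)))  # False: not a PPDS member
print(can_access(citizen, price, date(2024, 1, 1)))   # True: embargo has lapsed
```

Even in this toy version, the access decision depends on who asks, what is asked for, and when, which is the ‘multi-tiered access’ problem flagged above. Each Member State would need to settle the equivalent of these rules for every data field it shares.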

5. No data, no fun — and multi-speed datafication

Related to the risk that some EU Member States will become PPDS members and others not, there is a risk (or rather, a reality) that not all PPDS members will equally contribute data—thus creating multi-speed datafication, even within the Member States that opt in to the PPDS.

First, the PPDS Communication makes it clear that ‘Member States will remain in control over which data they wish to share with the PPDS (beyond the data that must be published on TED under the Public Procurement Directives)’ (at 7). It further specifies that ‘With the eForms, it will be possible for the first time to provide data in notices that should not be published, or not immediately. This is important to give assurance to public buyers that certain data is not made publicly available or not before a certain point in time (e.g. prices)’ (at 7, fn 17).

This means that each Member State will only have to plug whichever data it captures and decides to share into PPDS. It seems plain to see that this will result in different approaches to data capture, multiple levels of granularity, and varying approaches to restricting access to the data in the different Member States, especially bearing in mind that ‘eForms are not an “off the shelf” product that can be implemented only by IT developers. Instead, before developers start working, procurement policy decision-makers have to make a wide range of policy decisions on how eForms should be implemented’ in the different Member States (see eForms Implementation Handbook, at 9).

Second, the PPDS Communication is clear (in a footnote) that ‘One of the conditions for a successful establishment of the PPDS is that Member States put in place automatic data capture mechanisms, in a first step transmitting data from their national portals and contract registers’ (at 4, fn 10). This implies that Member States may need to move away from manually inputted information and that those seeking to create new mechanisms for automatic procurement data capture can take an incremental approach, which is very much baked into the PPDS design. This relates, for example, to the distinction between pre- and post-award procurement data, with pre-award data subjected to higher demands under EU law. It also relates to above and below threshold data, as only above threshold data is subjected to mandatory eForms compliance.

In the end, the extent to which a (willing) Member State will contribute data to the PPDS depends on its decisions on eForms implementation, which should be well underway given the October 2023 deadline for mandatory use (for above threshold contracts). Crucially, Member States contributing more data may feel let down when no comparable data is contributed to PPDS by other Member States, which can well operate as a disincentive to contribute any further data, rather than as an incentive for the others to match up that data.

6. Ambitious eForms implementation as the PPDS’ Achilles heel

As the analysis above has shown, the viability of the PPDS and its fitness for purpose (especially for EU-level oversight and enforcement purposes) crucially depends on the Member States deciding to take an ambitious approach to the implementation of eForms, not solely by maximising their flexibility for voluntary uses (as discussed here) but, crucially, by extending their mandatory use (under national law) to all below threshold procurement. It is now also clear that there is a need for as much homogeneity as possible in the implementation of eForms in order to guarantee that the information plugged into PPDS is comparable, which is an aspect of data quality that the PPDS Communication does not seem to have considered at all.

It seems that, due to competing timings, this poses a bit of a problem for the rollout of the PPDS. While eForms need to be fully implemented domestically by October 2023, the PPDS Communication suggests that the connection of national portals will be a matter for 2024, as the first part of the project will concern the top two layers and data connection will follow (or, at best, be developed in parallel). Somehow, it feels like the PPDS is being built without a strong enough foundation. It would be a shame (to put it mildly) if Member States having completed a transition to eForms by October 2023 were dissuaded from a second transition into a more ambitious eForms implementation in 2024 for the purposes of the PPDS.

Given that the most likely approach to eForms implementation is rather minimalistic, it can well be that the PPDS results in not much more than an empty shell with fancy digital analytics limited to very superficial uses. In that regard, the two-year delay in progressing the PPDS has created a very narrow (and quickly dwindling) window of opportunity for Member States to engage with an ambitious process of eForms implementation.

7. Final thoughts

It seems to me that limited and slow progress will be attained under the PPDS in coming years. Given the undoubted value of harnessing procurement data, I sense that Member States will progress domestically, but primarily in specific settings such as that of their central purchasing bodies (see here). However, whether they will be onboarded into PPDS as enthusiastic members seems less likely.

The scenario seems to resemble limited voluntary cooperation in other areas (eg interoperability; for discussion see here). It may well be that the logic of EU competence allocation required this tentative step as a first move towards a more robust and proactive approach by the Commission in a few years, on grounds that the goal of creating the European data space could not be achieved through this less interventionist approach.

However, given the speed at which digital transformation could take place (and is taking place in some parts of the EU), and the rhetoric of transformation and revolution that keeps being used in this policy area, I can’t but feel let down by the approach in the PPDS Communication, which started with the decision to build the eForms on the existing regulatory framework, rather than more boldly seeking a reform of the EU procurement rules to facilitate their digital fitness.

Governing the Assessment and Taking of Risks in Digital Procurement Governance

In a previous blog post, I explored the main governance risks and legal obligations arising from the adoption of digital technologies, which revolve around data governance, algorithmic transparency, technological dependency, technical debt, cybersecurity threats, the risks stemming from the long-term erosion of the skills base in the public sector, and difficult trade-offs due to the uncertainty surrounding immature and still changing technologies within an also evolving regulatory framework. To address such risks and ensure compliance with the relevant governance obligations, I stressed the need to embed a comprehensive mechanism of risk assessment in the process of technological adoption.

In a new draft chapter (num 9) for my book project, I analyse how to embed risk assessments in the initial stages of decision-making processes leading to the adoption of digital solutions for procurement governance, and how to ensure that they are iterated throughout the lifecycle of use of digital technologies. To do so, I critically review the model of AI risk regulation that is emerging in the EU and the UK, which is based on self-regulation and self-assessment. I consider its shortcomings and how to strengthen the model, including the possibility of subjecting the process of technological adoption to external checks. The analysis converges with a broader proposal for institutionalised regulatory checks on the adoption of digital technologies by the public sector that I will develop more fully in another part of the book.

This post provides a summary of my main findings, on which I will welcome any comments: a.sanchez-graells@bristol.ac.uk. The full draft chapter is free to download: A Sanchez-Graells, ‘Governing the Assessment and Taking of Risks in Digital Procurement Governance’ to be included in A Sanchez-Graells, Digital Technologies and Public Procurement. Gatekeeping and experimentation in digital public governance (OUP, forthcoming), Available at SSRN: https://ssrn.com/abstract=4282882.

AI Risk Regulation

The emerging (global) model of AI regulation is risk-based, as opposed to a strict precautionary approach. This implies an assumption that ‘a technology will be adopted despite its harms’. This primarily means accepting that technological solutions may (or will) generate (some) negative impacts on public and private interests, even if it is not known when or how those harms will arise, or how extensive they will be. AI risks are unique, as they are ‘long-term, low probability, systemic, and high impact’, and ‘AI both poses “aggregate risks” across systems and low probability but “catastrophic risks to society”’ [for discussion, see Margot E Kaminski, ‘Regulating the risks of AI’ (2023) 103 Boston University Law Review, forthcoming].

This should thus trigger careful consideration of the ultimate implications of AI risk regulation, and argues in favour of taking a robust regulatory approach, including to the governance of the risk regulation mechanisms put in place, which may well require external controls, potentially by an independent authority. By contrast, the emerging model of AI risk regulation in the context of procurement digitalisation in the EU and the UK leaves the adoption of digital technologies by public buyers largely unregulated and only subject to voluntary measures, or to open-ended obligations in areas without clear impact assessment standards (which reduces the prospect of effective mandatory enforcement).

Governance of Procurement Digitalisation in the EU

Despite the emergence of a quickly expanding set of EU digital law instruments imposing a patchwork of governance obligations on public buyers, whether or not they adopt digital technologies (see here), the primary decision whether to adopt digital technologies is not subject to any specific constraints, and the substantive obligations that follow from the diverse EU law instruments tend to refer to open-ended standards that require advanced technical capabilities to operationalise them. This would not be altered by the proposed EU AI Act.

Procurement-related AI uses are classified as minimal risk under the EU AI Act, which leaves them subject only to voluntary self-regulation via codes of conduct—yet to be developed. Such codes of conduct should encourage voluntary compliance with the requirements applicable to high-risk AI uses—such as risk management systems, data and data governance requirements, technical documentation, record-keeping, transparency, or accuracy, robustness and cybersecurity requirements—‘on the basis of technical specifications and solutions that are appropriate means of ensuring compliance with such requirements in light of the intended purpose of the systems.’ This seems to introduce a further element of proportionality or ‘adaptability’ requirement that could well water down the requirements applicable to minimal risk AI uses.

Importantly, while it is possible for Member States to draw up such codes of conduct, the EU AI Act would pre-empt Member States from going further and mandating compliance with specific obligations (eg by imposing a blanket extension of the governance requirements designed for high-risk AI uses) across their public administrations. The emergent EU model is thus clearly limited to the development of voluntary codes of conduct and their likely content, while yet unknown, seems unlikely to impose the same standards applicable to the adoption of high-risk AI uses.

Governance of Procurement Digitalisation in the UK

Despite its deliberate light-touch approach to AI regulation and its active efforts to deviate from the EU, the UK is relatively advanced in the formulation of voluntary standards to govern procurement digitalisation. Indeed, the UK has adopted guidance for the use of AI in the public sector, and for AI procurement, and is currently piloting an algorithmic transparency standard (see here). The UK has also adopted additional guidance in the Digital, Data and Technology Playbook and the Technology Code of Practice. Remarkably, despite acknowledging the need for risk assessments (and even linking their conduct to spend approvals required for the acquisition of digital technologies by central government organisations), none of these instruments provides clear standards on how to assess (and mitigate) risks related to the adoption of digital technologies.

Thus, despite the proliferation of guidance documents, the substantive assessment of governance risks in digital procurement remains insufficiently addressed and left to undefined risk assessment standards and practices. The only exception concerns cyber security assessments, given the consolidated approach and guidance of the National Cyber Security Centre. This lack of precision in the substantive requirements applicable to data and algorithmic impact assessments clearly constrains the likely effectiveness of the UK’s approach to embedding technology-related impact assessments in the process of adoption of digital technologies for procurement governance (and, more generally, for public governance). In the absence of clear standards, data and algorithmic impact assessments will lead to inconsistent approaches and varying levels of robustness. The absence of standards will also increase the need to access specialist expertise to design and carry out the assessments. Developing such standards and creating an effective institutional mechanism to ensure compliance therewith thus remain a challenge.

The Need for Strengthened Digital Procurement Governance

Both in the EU and the UK, the emerging model of AI risk regulation leaves digital procurement governance to compliance with voluntary measures such as (future) codes of conduct or transparency standards, or imposes open-ended obligations in areas without clear standards (which reduces the prospect of effective mandatory enforcement). This follows general trends of AI risk regulation and evidences the emergence of a (sub)model highly dependent on self-regulation and self-assessment. This approach is rather problematic.

Self-Regulation: Outsourcing Impact Assessment Regulation to the Private Sector

The absence of mandatory standards for data and algorithmic impact assessments, as well as the embedded flexibility in the standards for cyber security, are bound to outsource the setting of the substantive requirements for those impact assessments to private vendors offering solutions for digital procurement governance. With limited public sector digital capability preventing a detailed specification of the applicable requirements, it is likely that these will be limited to a general obligation for tenderers to provide an impact assessment plan, perhaps by reference to emerging (international private) standards. This would imply the outsourcing of standard setting for risk assessments to private standard-setting organisations and, in the absence of those standards, to the tenderers themselves. This generates a clear and problematic risk of regulatory capture. Moreover, this process of outsourcing or excessive reliance on private agents to commercially determine impact assessment requirements is not sufficiently exposed to scrutiny and contestation.

Self-Assessment: Inadequacy of Mechanisms for Contestability and Accountability

Public buyers will rarely develop the relevant technological solutions but rather acquire them from technological providers. In that case, the duty to carry out the self-assessment will (or should) be cascaded down to the technology provider through contractual obligations. This would place the technology provider as ‘first party’ and the public buyer as ‘second party’ in relation to assuring compliance with the applicable obligations. In a setting of limited public sector digital capability, and in part as a result of a lack of clear standards providing an applicable benchmark (as above), the self-assessment of compliance with risk management requirements will either be de facto outsourced to private vendors (through a lack of challenge of their practices), or carried out by public buyers with limited capabilities (eg during the oversight of contract implementation). Even where public buyers have the required digital capabilities to carry out a more thorough analysis, they lack independence. ‘Second party’ assurance models unavoidably raise questions about their integrity due to the conflicting interests of the assurance provider who wants to use the system (ie the public buyer).

This ‘second party’ assurance model does not include adequate challenge mechanisms despite efforts to disclose (parts of) the relevant self-assessments. Such disclosures are constrained by general problems with ‘comply or explain’ information-based governance mechanisms, with the emerging model showing design features that have proven problematic in other contexts (such as corporate governance and financial market regulation). Moreover, there is no clear mechanism to contest the decisions to adopt digital technologies revealed by the algorithmic disclosures. In many cases, shortcomings in the risk assessments and the related minimisation and mitigation measures will only become observable after the materialisation of the underlying harms. For example, the effects of the adoption of a defective digital solution for decision-making support (eg a recommender system) will only emerge in relation to challengeable decisions in subsequent procurement procedures that rely on such a solution. At that point, undoing the effects of the use of the tool may be impossible or excessively costly. In this context, challenges based on procedure-specific harms, such as the possibility to challenge discrete procurement decisions under the general rules on procurement remedies, are inadequate, not least because there can be negative systemic harms that are very hard to capture in the challenge to discrete decisions, or for which no agent with active standing has adequate incentives. To avoid potential harms more effectively, ex ante external controls are needed instead.

Creating External Checks on Procurement Digitalisation

It is thus necessary to consider the creation of external ex ante controls applicable to these decisions, to ensure an adequate embedding of effective risk assessments to inform (and constrain) them. Two models are worth considering: certification schemes and independent oversight.

Certification or Conformity Assessments

While not applicable to procurement uses, the model of conformity assessment in the proposed EU AI Act offers a useful blueprint. The main potential shortcoming of conformity assessment systems is that they largely rely on self-assessments by the technology vendors, and thus on first party assurance. Third-party certification (or algorithmic audits) is possible, but voluntary. Whether there would be sufficient (market) incentives to generate a broad (voluntary) use of third-party conformity assessments remains to be seen. While it could be hoped that public buyers could impose the use of certification mechanisms as a condition for participation in tender procedures, this is a less than guaranteed governance strategy given the EU procurement rules’ functional approach to the use of labels and certificates—which systematically require public buyers to accept alternative means of proof of compliance. This thus seems to offer limited potential for (voluntary) certification schemes in this specific context.

Relatedly, the conformity assessment system foreseen in the EU AI Act is also weakened by its reliance on vague concepts with non-obvious translation into verifiable criteria in the context of a third-party assurance audit. This can generate significant limitations in the conformity assessment process. This difficulty is intended to be resolved through the development of harmonised standards by European standardisation organisations and, where those do not exist, through the approval by the European Commission of common specifications. However, such harmonised standards will largely create the same risks of commercial regulatory capture mentioned above.

Overall, the possibility of relying on ‘third-party’ certification schemes offers limited advantages over the self-regulatory approach.

Independent External Oversight

Moving beyond the governance limitations of voluntary third-party certification mechanisms and creating effective external checks on the adoption of digital technologies for procurement governance would require external oversight. An option would be to make the envisaged third-party conformity assessments mandatory, but that would perpetuate the risks of regulatory capture and the outsourcing of the assurance system to private parties. A different, preferable option would be to assign the approval of the decisions to adopt digital technologies and the verification of the relevant risk assessments to a centralised authority also tasked with setting the applicable requirements therefor. The regulator would thus be placed as gatekeeper of the process of transition to digital procurement governance, instead of the atomised imposition of this role on public buyers. This would be reflective of the general features of the system of external controls proposed in the US State of Washington’s Bill SB 5116 (for discussion, see here).

The main goal would be to introduce an element of external verification of the assessment of potential AI harms and the related taking of risks in the adoption of digital technologies. It is submitted that there is a need for the regulator to be independent, so that the system fully encapsulates the advantages of third-party assurance mechanisms. It is also submitted that the data protection regulator may not be best placed to take on the role as its expertise—even if advanced in some aspects of data-intensive digital technologies—primarily relates to issues concerning individual rights and their enforcement. The more diffuse collective interests at stake in the process of transition to a new model of public digital governance (not only in procurement) would require a different set of analyses. While reforming data protection regulators to become AI mega-regulators could be an option, that is not necessarily desirable and it seems that an easier to implement, incremental approach would involve the creation of a new independent authority to control the adoption of AI in the public sector, including in the specific context of procurement digitalisation.

Conclusion

An analysis of emerging regulatory approaches in the EU and the UK shows that the adoption of digital technologies by public buyers is largely unregulated and only subjected to voluntary measures, or to open-ended obligations in areas without clear standards (which reduces the prospect of effective mandatory enforcement). The emerging model of AI risk regulation in the EU and UK follows more general trends and points at the consolidation of a (sub)model of risk-based digital procurement governance that strongly relies on self-regulation and self-assessment.

However, given its limited digital capabilities, the public sector is not best placed to control or influence the process of self-regulation, which results in the outsourcing of crucial regulatory tasks to technology vendors and the consequent risk of regulatory capture and suboptimal design of commercially determined governance mechanisms. These risks are compounded by the emerging ‘second party assurance’ model, as self-assessments by technology vendors would not be adequately scrutinised by public buyers, either due to a lack of digital capabilities or the unavoidable structural conflicts of interest of assurance providers with an interest in the use of the technology, or both. This ‘second party’ assurance model does not include adequate challenge mechanisms despite efforts to disclose (parts of) the relevant self-assessments. Such disclosures are constrained by general problems with ‘comply or explain’ information-based governance mechanisms, with the emerging model showing design features that have proven problematic in other contexts (such as corporate governance and financial market regulation). Moreover, there is no clear mechanism to contest the decisions revealed by the disclosures, including in the context of (delayed) specific uses of the technological solutions.

The analysis also shows how a model of third-party assurance or certification would be affected by the same issues of outsourcing of regulatory decisions to private parties, and ultimately would largely replicate the shortcomings of the self-regulatory and self-assessed model. A certification model would thus only generate a marginal improvement over the emerging model—especially given the functional approach to the use of certification and labels in procurement.

Moving past these shortcomings requires assigning the approval of decisions whether to adopt digital technologies and the verification of the related impact assessments to an independent authority: the ‘AI in the Public Sector Authority’ (AIPSA). I will fully develop a proposal for such authority in coming months.

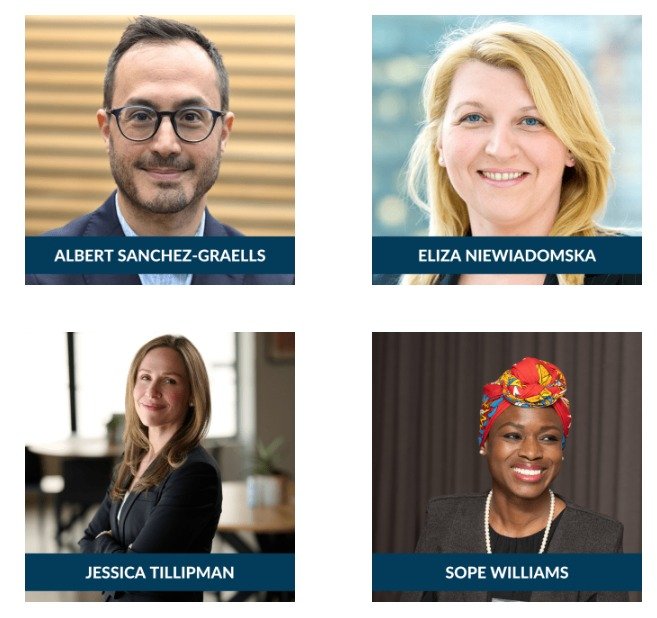

Registration open: TECH FIXES FOR PROCUREMENT PROBLEMS?

As previously announced, on 15 December, I will have the chance to discuss my ongoing research on procurement digitalisation with a stellar panel: Eliza Niewiadomska (EBRD), Jessica Tillipman (GW Law), and Sope Williams (Stellenbosch).

The webinar will provide an opportunity to take a hard look at the promise of tech fixes for procurement problems, focusing on key issues such as:

The ‘true’ potential of digital technologies in procurement.

The challenges arising from putting key enablers in place, such as an adequate big data architecture and access to digital skills in short supply.

The challenges arising from current regulatory frameworks and constraints not applicable to the private sector.

New challenges posed by data governance and cybersecurity risks.

The webinar will be held on December 15, 2022 at 9:00 am EST / 2:00 pm GMT / 3:00 pm CET-SAST. Full details and registration at: https://blogs.gwu.edu/law-govpro/tech-fixes-for-procurement-problems/.

Save the date: 15 Dec, Tech fixes for procurement problems?

If you are interested in procurement digitalisation, please save the date for an online workshop on ‘Tech fixes for procurement problems?’ on 15 December 2022, 2pm GMT. I will have the chance to discuss my ongoing research (scroll down for a few samples) with a stellar panel: Eliza Niewiadomska (EBRD), Jessica Tillipman (GW Law), and Sope Williams (Stellenbosch). We will also have plenty of time for a conversation with participants. Do not let other commitments get in the way of joining the discussion!

More details and registration coming soon. For any questions, please email me: a.sanchez-graells@bristol.ac.uk.

Digital technologies, hype, and public sector capability

© Martin Brandt / Flickr.

By Albert Sanchez-Graells (@How2CrackANut) and Michael Lewis (@OpsProf).*

The public sector’s reaction to digital technologies and the associated regulatory and governance challenges is difficult to map, but there are some general trends that seem worrisome. In this blog post, we reflect on the problematic compound effects of technology hype cycles and diminished public sector digital technology capability, paying particular attention to their impact on public procurement.

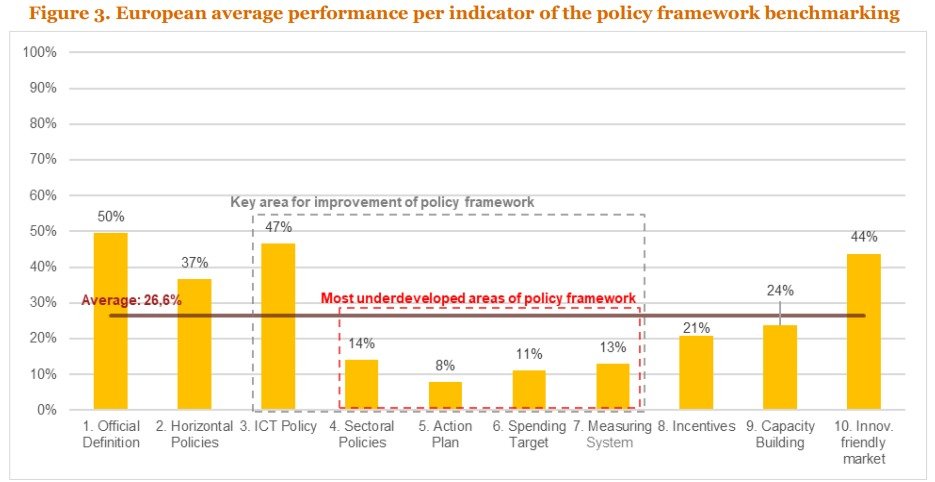

Digital technologies, smoke, and mirrors

There is a generalised over-optimism about the potential of digital technologies, as well as their likely impact on economic growth and international competitiveness. There is also a rush to ‘look digitally advanced’ eg through the formulation of ‘AI strategies’ that are unlikely to generate significant practical impacts (more on that below). However, there seems to be a big (and growing?) gap between what countries report (or pretend) to be doing (eg in reports to the OECD AI observatory, or in relation to any other AI readiness ranking) and what they are practically doing. A relatively recent analysis showed that European countries (including the UK) underperform particularly in relation to strategic aspects that require detailed work (see graph). In other words, there are very few countries ready to move past signalling a willingness to jump onto the digital tech bandwagon.

Source: PWC, The strategic use of public procurement for innovation in the digital economy (2021: 9).

Some of that over-optimism stems from limited public sector capability to understand the technologies themselves (as well as their implications), which leads to naïve or captured approaches to policymaking (on capture, see the eye-watering account emerging from the #Uberfiles). Given the closer alignment (or political meddling?) of policymakers with eg research funding programmes, including but not limited to academic institutions, naïve or captured approaches impact other areas of ‘support’ for the development of digital technologies. This also trickles down to procurement, as the ‘purchasing’ of digital technologies with public money is seen as a (not very subtle) way of subsidising their development (nb. there are many proponents of that approach, such as Mazzucato, as discussed here). However, this can also generate further space for capture, as the same lack of capability that affects high(er) level policymaking also affects funding organisations and ‘street level’ procurement teams. This results in a situation where procurement best practices such as market engagement result in the ‘art of the possible’ being determined by private industry. There is rarely co-creation of solutions, but too often a capture of procurement expenditure by entrepreneurs.

Limited capability, difficult assessments, and dependency risk

Perhaps the universalist techno-utopian framing (cost savings and efficiency and economic growth and better health and new service offerings, etc.) means it is increasingly hard to distinguish the specific merits of different digitalisation options – and the commercial interests that actively hype them. It is also increasingly difficult to carry out effective impact assessments where the (overstressed) benefits are relatively narrow and short-termist, while the downsides of technological adoption are diffuse and likely to only emerge after a significant time lag. Ironically, this limited ability to diagnose ‘relative’ risks and rewards is further exacerbated by the diminishing technical capability of the state: a negative mirror to Amazon’s flywheel model for amplifying capability. Indeed, as stressed by Bharosa (2022): “The perceptions of benefits and risks can be blurred by the information asymmetry between the public agencies and GovTech providers. In the case of GovTech solutions using new technologies like AI, Blockchain and IoT, the principal-agent problem can surface”.

As Collington (2021) points out, despite the “innumerable papers in organisation and management studies” on digitalisation, there is much less understanding of how interests of the digital economy might “reconfigure” public sector capacity. In studying Denmark’s policy of public sector digitalisation – which had the explicit intent of stimulating nascent digital technology industries – she observes the loss of the very capabilities necessary “for welfare states to develop competences for adapting and learning”. In the UK, where it might be argued there have been attempts, such as the Government Digital Service (GDS) and NHS Digital, to cultivate some digital skills ‘in-house’, the enduring legacy has been more limited in the face of endless demands for ‘cost saving’. Kattel and Takala (2021) for example studied GDS and noted that, despite early successes, they faced the challenge of continual (re)legitimization and squeezed investment; especially given the persistent cross-subsidised ‘land grab’ of platforms, like Amazon and Google, that offer ‘lower cost and higher quality’ services to governments. The early evidence emerging from the pilot algorithmic transparency standard seems to confirm this trend of (over)reliance on external providers, including Big Tech providers such as Microsoft (see here).

This is reflective of Milward and Provan’s (2003) ‘hollow state’ metaphor, used to describe “the nature of the devolution of power and decentralization of services from central government to subnational government and, by extension, to third parties – nonprofit agencies and private firms – who increasingly manage programs in the name of the state.” Two decades after its formulation, the metaphor is all the more applicable, as the hollowing out of the State is arguably a few orders of magnitude larger due to the techno-centricity of reforms in the race towards a new model of digital public governance. It seems as if the role of the State is currently understood as being limited to that of enabler (and funder) of public governance reforms, not solely implemented, but driven by third parties (primarily highly concentrated digital tech giants), so that “some GovTech providers can become the next Big Tech providers that could further exploit the limited technical knowledge available at public agencies [and] this dependency risk can become even more significant once modern GovTech solutions replace older government components” (Bharosa, 2022). This is a worrying trend, as once dominance is established, the expected anticompetitive effects of any market can be further multiplied and propagated in a setting of low public sector capability that fuels risk aversion, where the adage “Nobody ever gets fired for buying IBM” has been around since the 70s with limited variation (as to the tech platform it is ‘safe to engage’).

Ultimately, the more the State takes a back seat, the more its ability to steer developments fades away. The rise of a GovTech industry seeking to support governments in their digital transformation generates “concerns that GovTech solutions are a Trojan horse, exploiting the lack of technical knowledge at public agencies and shifting decision-making power from public agencies to market parties, thereby undermining digital sovereignty and public values” (Bharosa, 2022). Therefore, continuing to simply allow experimentation in the GovTech market without a clear strategy on how to rein the industry in (and, relatedly, how to build the public sector capacity needed to do so as a precondition) is a strategy with (exponentially) increasing reversal costs and an unclear tipping point past which meaningful change may simply not be possible.

Public sector and hype cycle

Being more pragmatic, the widely cited, if impressionistic, “hype cycle model” developed by Gartner Inc. provides additional insights. The model presents a generalized expectations path that new technologies follow over time, which suggests that new industrial technologies progress through different stages up to a peak that is followed by disappointment and, later, a recovery of expectations.

Although intended to describe aggregate technology level dynamics, it can be useful to consider the hype cycle for public digital technologies. In the early phases of the curve, vendors and potential users are actively looking for ways to create value from new technology and will claim endless potential use cases. If these are subsequently piloted or demonstrated – even if ‘free’ – they are exciting and visible and, as vendors are keen to share use cases, they contribute to creating hype. Limited public sector capacity can also underpin excitement for use cases that are so far removed from their likely practical implementation, or so heavily curated, that they do not provide an accurate representation of how the technology would operate at production phase in the generally messy settings of public sector activity and public sector delivery. In phases such as the peak of inflated expectations, only organisations with sufficient digital technology and commercial capabilities can see through sophisticated marketing and sales efforts to separate the hype from the true potential of immature technologies. The emperor is likely to be naked, but who’s to say?

Moreover, as mentioned above, international organisations one step (upwards) removed from the State create additional fuel for the hype through mapping exercises and rankings, which generate a vicious circle of “public sector FOMO” as entrepreneurial bureaucrats and politicians are unlikely to want to be listed bottom of the table and can thus be particularly receptive to hyped pitches. This can leverage incentives to support *almost any* sort of tech pilots and implementations just to be seen to do something ‘innovative’, or to rush through high-risk implementations seeking to ‘cash in’ on the political and other rents they can (be spun to) generate.

However, as emerging evidence shows (AI Watch, 2022), there is a big attrition rate between announced and piloted adoptions, and those that are ultimately embedded in the functioning of the public sector in a value-adding manner (ie those that reach the plateau of productivity stage in the cycle). Crucially, the AI literacy and skills of the staff involved in the use of the technology post-pilot are among the critical challenges to the AI implementation phase in the EU public sector (AI Watch, 2021). Thus, early moves in the hype curve are unlikely to translate into sustainable and expectations-matching deployments in the absence of a significant boost of public sector digital technology capabilities. Without committed long-term investment in that capability, piloting and experimentation will rarely translate into anything but expensive pet projects (and lucrative contracts).

Locking the hype in: IP, data, and acquisitions markets

Relatedly, the lack of public sector capacity is a foundation for eg policy recommendations seeking to avoid the public buyer acquiring (and having to manage) IP rights over the digital technologies it funds through procurement of innovation (see eg the European Commission’s policy approach: “There is also a need to improve the conditions for companies to protect and use IP in public procurement with a view to stimulating innovation and boosting the economy. Member States should consider leaving IP ownership to the contractors where appropriate, unless there are overriding public interests at stake or incompatible open licensing strategies in place” at 10).

This is as clear as mud (eg what does overriding public interest mean here?) and fails to establish an adequate balance between public funding and public access to the technology, while also generating (unavoidable?) risks of lock-in and exacerbating issues of lack of capacity in the medium and long term. This is so not only in terms of re-procuring the technology (see related discussion here), but also in terms of the broader impact this can have if the technology is propagated to the private sector as a result of or in relation to public sector adoption.

Linking this recommendation to the hype curve, such an approach to relying on proprietary tech with all rights reserved to the third-party developer means that first mover advantages secured by private firms at the early stages of the emergence of a new technology are likely to be very profitable in the long term. This creates further incentives for hype and for investment in being the first to capture decision-makers, which results in an overexposure of policymakers and politicians to tech entrepreneurs pushing hard for (too early) adoption of technologies.

The exact same dynamic emerges in relation to access to data held by public sector entities without which GovTech (and other types of) innovation cannot take place. The value of data is still to be properly understood, as are the mechanisms that can ensure that the public sector obtains and retains the value that data uses can generate. Schemes to eg obtain value options through shares in companies seeking to monetise patient data are not bullet-proof, as some NHS Trusts recently found out (see here, and here paywalled). Contractual regulation of data access, data ownership and data retention rights and obligations pose a significant challenge to institutions with limited digital technology capabilities and can compound IP-related lock-in problems.

A final further complication is that the market for acquisitions of GovTech and other digital technologies start-ups and scale-ups is very active and unpredictable. Even with standard levels of due diligence, public sector institutions that had carefully sought to foster a diverse innovation ecosystem and to avoid contracting (solely) with big players may end up in their hands anyway, once their selected provider leverages their public sector success to deliver an ‘exit strategy’ for their founders and other (venture capital) investors. Change of control clauses clearly have a role to play, but the outside alternatives for public sector institutions engulfed in this process of market consolidation can be limited and difficult to assess, and particularly challenging for organisations with limited digital technology and associated commercial capabilities.

Procurement at the sharp end

Going back to the ongoing difficulty (and unwillingness?) in regulating some digital technologies, there is a (dominant) general narrative that imposes a ‘balanced’ approach between ensuring adequate safeguards and not stifling innovation (with some jurisdictions, such as the UK, clearly erring much more on the side of caution in regulating than others, such as the EU with the proposed EU AI Act, although the scope of application of its regulatory requirements is narrower than it may seem). This increasingly means that the tall order of imposing regulatory constraints on the digital technologies and the private sector companies that develop (and own) them is passed on to procurement teams, as the procurement function is seen as a useful regulatory mechanism (see eg Select Committee on Public Standards, Ada Lovelace Institute, Coglianese and Lampmann (2021), Ben Dor and Coglianese (2022), etc but also the approach favoured by the European Commission through the standard clauses for the procurement of AI).

However, this approach completely ignores issues of (lack of) readiness and capability that indicate that the procurement function is being set up to fail in this gatekeeping role (in the absence of massive investment in upskilling). Not only because it lacks the (technical) ability to figure out the relevant checks and balances, and because the levels of required due diligence far exceed standard practices in more mature markets and lower risk procurements, but also because the procurement function can be at the sharp end of the hype cycle and (pragmatically) unable to stop the implementation of technological deployments that are either wasteful or problematic from a governance perspective, as public buyers are rarely in a position of independent decision-making that could enable them to do so. Institutional dynamics can be difficult to navigate even with good insights into problematic decisions, and can be intractable in a context of low capability to understand potential problems and push back against naïve or captured decisions to procure specific technologies and/or from specific providers.

Final thoughts

So, as a generalisation, lack of public sector capability seems to be skewing high level policy and limiting the development of effective plans to roll it out, filtering through to incentive systems that will have major repercussions on what technologies are developed and procured, with risks of lock-in and centralisation of power (away from the public sector), as well as generating a false comfort in the ability of the public procurement function to provide an effective route to tech regulation. The answer to these problems is at once evident, simple, and politically intractable in view of the permeating hype around new technologies: more investment in capacity building across the public sector.

This regulatory answer is further complicated by the difficulty in implementing it in an employment market where the public sector, its reward schemes and social esteem are dwarfed by the high salaries, flexible work conditions and allure of the (Big) Tech sector and the GovTech start-up scene. Some strategies aimed at alleviating the generalised lack of public sector capability, e.g. through a GovTech platform at the EU level, can generate further risks of reduction of (in-house) public sector capability at State (and regional, local) level as well as bottlenecks in the access of tech to the public sector that could magnify issues of market dominance, lock-in and over-reliance on GovTech providers (as discussed in Hoekstra et al, 2022).

Ultimately, it is imperative to build more digital technology capability in the public sector, and to recognise that there are no quick (or cheap) fixes to do so. Otherwise, much like with climate change, despite the existence of clear interventions that can mitigate the problem, the hollowing out of the State and the increasing overdependency on Big Tech providers will be a self-fulfilling prophecy for which governments will have no one to blame but themselves.

___________________________________

* We are grateful to Rob Knott (@Procure4Health) for comments on an earlier draft. Any remaining errors and all opinions are solely ours.

Algorithmic transparency: some thoughts on UK's first four published disclosures and the standards' usability

© Fabrice Jazbinsek / Flickr.

The Algorithmic Transparency Standard (ATS) is one of the UK’s flagship initiatives for the regulation of public sector use of artificial intelligence (AI). The ATS encourages (but does not mandate) public sector entities to fill in a template to provide information about the algorithmic tools they use, and why they use them [see e.g. Kingsman et al (2022) for an accessible overview].

The ATS is currently being piloted, and has so far resulted in the publication of four disclosures relating to the use of algorithms in different parts of the UK’s public sector. In this post, I offer some thoughts based on these initial four disclosures, in particular from the perspective of the usability of the ATS in facilitating an enhanced understanding of AI use cases, and accountability for those.

The first four disclosed AI use cases

The ATS pilot has so far published information in two batches (on 1 June and 6 July 2022), comprising the following four AI use cases:

Within Cabinet Office, the GOV.UK Data Labs team piloted the ATS for their Related Links tool, a recommendation engine built to aid navigation of GOV.UK (the primary UK central government website) by providing relevant onward journeys from a content page, with the aim of helping users find useful information and content.

In the Department for Health and Social Care and NHS Digital, the QCovid team piloted the ATS with a COVID-19 clinical tool used to predict how at risk individuals might be from COVID-19. The tool was developed for use by clinicians in support of conversations with patients about personal risk, and it uses algorithms to combine a number of factors such as age, sex, ethnicity, height and weight (to calculate BMI), and specific health conditions and treatments in order to estimate the combined risk of catching coronavirus and being hospitalised or catching coronavirus and dying. Importantly, “The original version of the QCovid algorithms were also used as part of the Population Risk Assessment to add patients to the Shielded Patient List in February 2021. These patients were advised to shield at that time were provided support for doing so, and were prioritised for COVID-19 vaccination.”

The Information Commissioner's Office has piloted the ATS with its Registration Inbox AI, which uses a machine learning algorithm to categorise emails sent to the Information Commissioner's Office’s registration inbox and to send out an auto-reply where the algorithm “detects … a request about changing a business address. In cases where it detects this kind of request, the algorithm sends out an autoreply that directs the customer to a new online service and points out further information required to process a change request. Only emails with an 80% certainty of a change of address request will be sent an email containing the link to the change of address form.”

The Food Standards Agency piloted the ATS with its Food Hygiene Rating Scheme (FHRS) – AI, an algorithmic tool that helps local authorities prioritise inspections of food businesses by predicting their food hygiene rating and thus identifying which establishments might be at a higher risk of non-compliance with food hygiene regulations. Importantly, use of the tool is voluntary and “it is not intended to replace the current approach to generate a FHRS score. The final score will always be the result of an inspection undertaken by [a local authority] officer.”
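To make the ICO's 80% rule more concrete, the following is a minimal sketch of a threshold-gated auto-reply. It assumes a classifier that returns an estimated probability that an email is a change-of-address request. The function names, the keyword-based stand-in scorer and the reply text are all hypothetical, and the disclosure does not say whether the threshold is inclusive, so the `>=` comparison is also an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Threshold quoted in the ICO disclosure: only emails with an 80% certainty
# of being a change-of-address request receive the auto-reply.
AUTO_REPLY_THRESHOLD = 0.80

# Hypothetical reply text; the real wording is not given in the disclosure.
AUTO_REPLY_TEXT = "To change your business address, please use the online form."


@dataclass
class TriageResult:
    score: float               # estimated probability of a change-of-address request
    auto_reply: Optional[str]  # reply to send, or None to leave the email for a human


def triage_email(body: str, score_fn: Callable[[str], float]) -> TriageResult:
    """Send the auto-reply only when the score meets the threshold (assumed inclusive)."""
    score = score_fn(body)
    if score >= AUTO_REPLY_THRESHOLD:
        return TriageResult(score, AUTO_REPLY_TEXT)
    return TriageResult(score, None)


def toy_score(body: str) -> float:
    """Stand-in for the ML model (assumption): a crude keyword heuristic."""
    text = body.lower()
    hits = sum(phrase in text for phrase in ("change", "address", "moved"))
    return hits / 3
```

What happens to emails on either side of the threshold (for example, whether a human still checks the classification) is not something the sketch can answer; that is a governance choice rather than a coding one.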

Harmless (?) use cases

At first glance, and on the basis of the implications of the outcome of the algorithmic recommendation, it would seem that the four use cases are relatively harmless, i.e.:

If GOV.UK recommends links to content that is not relevant or helpful, the user may simply ignore them.

The outcome of the QCovid tool simply informs the GPs’ (or other clinicians’) assessment of the risk of their patients, and the GPs’ expertise should mediate any incorrect (either over-inclusive, or under-inclusive) assessments by the AI.

If the ICO sends an automatic email with information on how to change their business address to somebody that had submitted a different query, the receiver can simply ignore that email.

Incorrect or imperfect prioritisation of food businesses for inspection could result in the early inspection of a low-risk restaurant, or the late(r) inspection of a higher-risk restaurant, but this is already a risk implicit in allowing restaurants to open pending inspection; AI does not add risk.

However, this approach could be too simplistic or optimistic. It can be helpful to think about what could really happen if the AI got it wrong ‘in a disaster scenario’ based on possible user reactions (a useful approach promoted by the Data Hazards project). It seems to me that, on ‘worst case scenario’ thinking (and without seeking to be exhaustive):

If GOV.UK recommends content that is not helpful but is confusing, the user can either engage in red tape they did not need to complete (wasting both their time and public resources) or, worse, feel overwhelmed, confused or misled and abandon the administrative interaction they were initially seeking to complete. This can lead to exclusion from public services, and be particularly problematic if these situations can have a differential impact on different user groups.

There could be over-reliance on the QCovid algorithm by (too busy) GPs. This could lead to advising ‘as a matter of routine’ the taking of excessive precautions with significant potential impacts on the day to day lives of those affected—as was arguably the case for some of the citizens included in shielding categories in the earlier incarnation of the algorithm. Conversely, GPs that identified problems in the early use of the algorithm could simply ignore it, thus potentially losing the benefits of the algorithm in other cases where it could have been helpful—potentially leading to under-precaution by individuals that could have otherwise been better safeguarded.

As with the GOV.UK tool, the provision of irrelevant and potentially confusing information by the ICO’s auto-reply can lead to a waste of resources (e.g. users seeking to change their business registration address because they wrongly think it is a requirement to process their query or, at the lower end of the scale, users having to read and consider information about an administrative process they have no interest in). Beyond that, the classification algorithm could generate a loss of queries if there was no human check to verify that the AI classification was correct. If this check takes place anyway, the advantages of automating the sending of the initial email seem rather marginal.

As with the QCovid tool, the incorrect prediction of risk by the FHRS tool can lead to misuse of resources in the carrying out of inspections by local authorities, potentially pushing down the list of restaurants pending inspection some that are high-risk and that could thus see their inspection repeatedly delayed. This could have important public health implications, at least for those citizens using the to-be-inspected restaurants for longer than they would otherwise have. Conversely, inaccurate prioritisations that did not seem to catch more ‘risky’ restaurants could also lead to local authorities abandoning the tool. There is also a risk of profiling of certain types of businesses (and their owners), which could lead to victimisation if the tool was improperly used, or used in relation to restaurants that have been active for a longer period (eg to trigger fresh (re)inspections).

No AI application is thus entirely harmless. Of course, this is just a matter of theoretical speculation, as is the question whether reduced engagement with the AI would generate a second-tier negative effect, eg if ‘learning’ algorithms could not be revised and improved on the basis of ‘real-life’ feedback on whether their predictions were accurate or not.

I think that this sort of speculation offers a useful yardstick to assess the extent to which the ATS can be helpful and usable. I would argue that the ATS will be helpful to the extent that (a) it provides information capable of clarifying whether the relevant risks have been taken into account and properly mitigated or, failing that, (b) it provides information that can be used to challenge the insufficiency of any underlying risk assessments or mitigation strategies. Ultimately, AI transparency is not an end in itself, but simply a means of increasing accountability, at least in the context of public sector AI adoption. And it is clear that any degree of transparency generated by the ATS will be an improvement on the current situation, but is the ATS really usable?

Finding out more on the basis of the ATS disclosures

To try to answer that general question on whether the ATS is usable and serves to facilitate increased accountability, I have read the four disclosures in full. Here are my summaries and extracts of the relevant parts of each.

GOV.UK Related Links

Since May 2019, the tool has been using an algorithm called node2vec (a machine learning algorithm that learns network node embeddings) to train a model on the last three weeks of user movement data (web analytics data). The benefits are described as “the tool … predicts related links for a page. These related links are helpful to users. They help users find the content they are looking for. They also help a user find tangentially related content to the page they are on; it’s a bit like when you are looking for a book in the library, you might find books that are relevant to you on adjacent shelves.”

The way the tool works is described in some more detail: “The tool updates links every three weeks and thus tracks changes in user behaviour.” “Every three weeks, the machine learning algorithm is trained using the last three weeks of analytics data and trains a model that outputs related links that are published, overwriting the existing links with new ones.” “The average click through rate for related links is about 5% of visits to a content page. For context, GOV.UK supports an average of 6 million visits per day (Jan 2022). True volumes are likely higher owing to analytics consent tracking. We only track users who consent to analytics cookies …”.

The decision process is fully automated, but there is “a way for publishers to add/amend or remove a link from the component. On average this happens two or three times a month.” “Humans have the capability to recommend changes to related links on a page. There is a process for links to be amended manually and these changes can persist. These human expert generated links are preferred to those generated by the model and will persist.” Moreover, “GOV.UK has a feedback link, “report a problem with this page”, on every page which allows users to flag incorrect links or links they disagree with.” The tool was subjected to a Data Protection Impact Assessment (DPIA), but no other impact assessments (IAs) are listed.

When it comes to risk identification and mitigation, the disclosure indicates: “A recommendation engine can produce links that could be deemed wrong, useless or insensitive by users (e.g. links that point users towards pages that discuss air accidents).” and that, as mitigation: “We added pages to a deny list that might not be useful for a user (such as the homepage) or might be deemed insensitive (e.g. air accident reports). We also enabled publishers or anyone with access to the tagging system to add/amend or remove links. GOV.UK users can also report problems through the feedback mechanisms on GOV.UK.”
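The controls described in the disclosure (a deny list, plus publisher-curated links that persist and are preferred to the model's output) can be pictured as a simple merge step before publication. The following is a minimal sketch under stated assumptions: the function and parameter names are hypothetical, the default cap of five links per page is an assumption, and the disclosure does not say whether curated links fully replace the model's suggestions or are also checked against the deny list.

```python
from typing import Dict, Iterable, List, Set


def related_links_for_page(
    page: str,
    model_links: Iterable[str],
    manual_links: Dict[str, List[str]],
    deny_list: Set[str],
    max_links: int = 5,  # assumed cap, not stated in the disclosure
) -> List[str]:
    """Pick the links to publish for one page.

    Publisher-curated links, when present, replace the model output
    (assumption: full replacement rather than a blend). Otherwise the
    model's suggestions are filtered against the deny list.
    """
    if page in manual_links:
        return manual_links[page][:max_links]
    allowed = [
        link for link in model_links
        if link not in deny_list and link != page  # never link a page to itself
    ]
    return allowed[:max_links]
```

Note that nothing in such a step checks whether a link that survives the filter is actually helpful to a given user; that still depends on someone noticing and reporting a problem.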

Overall, then, the risk I had identified is only superficially addressed, in that the ATS disclosure does not show awareness of the potential differing implications of incorrect or useless recommendations across the spectrum of users. The narrative equating the recommendations to browsing the shelves of a library is quite suggestive in that regard, as is the fact that the quality controls are rather limited.

Indeed, it seems that the quality control mechanisms require a high level of effort by every publisher, as they need to check every three weeks whether the (new) related links appearing in each of the pages they publish are relevant and unproblematic. This seems to have reversed the functional balance of convenience. Before the implementation of the tool, only approximately 2,000 out of 600,000 pieces of content on GOV.UK had related links, as they had to be created manually (and thus, hopefully, were relevant, if not necessarily unproblematic). Now, almost all pages have up to five related content suggestions, but links are manually amended only two or three times per month across some 600,000 pages. A question arises whether this extremely low rate of manual intervention reflects the high quality of the system or, conversely, the same lack of resource to quality-assure content that previously prevented 98% of pages from having this type of related information.
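The orders of magnitude involved can be checked with back-of-the-envelope arithmetic on the figures quoted above. This is only a sketch: it treats the rounded figures (600,000 pages, about 2,000 with manual links, up to three amendments a month, 6 million daily visits and a click-through rate of about 5%) as exact and assumes, for simplicity, that every visit is a visit to a content page.

```python
# Figures as quoted in the disclosure and in this post (rounded).
TOTAL_PAGES = 600_000
PAGES_WITH_MANUAL_LINKS = 2_000
AMENDMENTS_PER_MONTH = 3          # upper end of "two or three times a month"
DAILY_VISITS = 6_000_000
CLICK_THROUGH_RATE = 0.05

# Share of pages that had related links before the tool was introduced.
share_with_links_before = PAGES_WITH_MANUAL_LINKS / TOTAL_PAGES

# Share of pages whose links are manually amended in a given month.
monthly_amendment_rate = AMENDMENTS_PER_MONTH / TOTAL_PAGES

# Rough number of daily clicks on algorithmically generated links
# (assumption: all visits are visits to content pages).
daily_clicks = DAILY_VISITS * CLICK_THROUGH_RATE

print(f"{share_with_links_before:.2%}")  # 0.33%
print(f"{monthly_amendment_rate:.4%}")   # 0.0005%
print(f"{daily_clicks:,.0f}")            # 300,000
```

On these rough numbers, hundreds of thousands of clicks a day follow links that are subject to manual correction at a rate of about one page in 200,000 per month.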

However, despite the queries as to the desirability of the AI implementation as described, the ATS disclosure is in itself useful because it allows the type of analysis above and, in case someone considers the situation unsatisfactory or would like to probe it further, there is a clear gateway to (try to) engage the entity responsible for this AI deployment.

QCovid algorithm

The algorithm was developed at the onset of the Covid-19 pandemic to drive government decisions on which citizens to advise to shield, support during shielding, and prioritise for vaccination rollout. Since the end of the shielding period, the tool has been modified. “The clinical tool for clinicians is intended to support individual conversations with patients about risk. Originally, the goal was to help patients understand the reasons for being asked to shield and, where relevant, help them do so. Since the end of shielding requirements, it is hoped that better-informed conversations about risk will have supported patients to make appropriate decisions about personal risk, either protecting them from adverse health outcomes or to some extent alleviating concerns about re-engaging with society.”

“In essence, the tool creates a risk calculation based on scoring risk factors across a number of data fields pertaining to demographic, clinical and social patient information.” “The factors incorporated in the model include age, ethnicity, level of deprivation, obesity, whether someone lived in residential care or was homeless, and a range of existing medical conditions, such as cardiovascular disease, diabetes, respiratory disease and cancer. For the latest clinical tool, separate versions of the QCOVID models were estimated for vaccinated and unvaccinated patients.”

It is difficult to assess how intensely the tool is (currently) used, although the ATS indicates that “In the period between 1st January 2022 and 31st March 2022, there were 2,180 completed assessments” and that “Assessment numbers often move with relative infection rate (e.g. higher infection rate leads to more usage of the tool).” The ATS also stresses that “The use of the tool does not override any clinical decision making but is a supporting device in the decision making process.” “The tool promotes shared decision making with the patient and is an extra point of information to consider in the decision making process. The tool helps with risk/benefit analysis around decisions (e.g. recommendation to shield or take other precautionary measures).”

The impact assessment of this tool is driven by those mandated for medical devices. The description is thus rather technical and not very detailed, although the selected examples it includes do capture the possibility of somebody being misidentified “as meeting the threshold for higher risk”, as well as someone not having “an output generated from the COVID-19 Predictive Risk Model”. The ATS does stress that “As part of patient safety risk assessment, Hazardous scenarios are documented, yet haven’t occurred as suitable mitigation is introduced and implemented to alleviate the risk.” That mitigation largely seems to be that “The tool is designed for use by clinicians who are reminded to look through clinical guidance before using the tool.”

I think this case shows two things. First, that it is difficult to understand how different parts of the analysis fit together when a tool that has had two very different uses is the object of a single ATS disclosure. There seems to be a good argument for use case specific ATS disclosures, even if the underlying AI deployment is the same (or a closely related one), as the implications of different uses from a governance perspective also differ.

Second, that in the context of AI adoption for healthcare purposes, there is a dual barrier to accessing relevant (and understandable) information: the tech barrier and the medical barrier. While the ATS does something to reduce the former, the latter very much remains in place and perhaps turns the issue of trustworthiness of the AI into one of trustworthiness of the clinician, which is not necessarily entirely helpful (not only in this specific use case, but in many others one can imagine). In that regard, it seems that the usability of the ATS is partially limited, and more could be done to increase meaningful transparency through AI-specific IAs, perhaps as proposed by the Ada Lovelace Institute.

In this case, the ATS disclosure has also provided some valuable information, but arguably to a lesser extent than the previous case study.

ICO’s Registration Inbox AI